Introducing Mailgun’s open-source MCP server

Navigating email data just got easier. With Mailgun’s open-source MCP server, developers can query email analytics using conversational AI. No dashboards, no waiting.

Accessing email data just got easier. With Mailgun’s open-source MCP server, developers can query email performance metrics using natural language—no dashboards, no complex API calls. Built on Model Context Protocol (MCP), this solution makes AI-powered email analytics seamless and efficient.

Here’s how it happened, and how it works.

What is MCP?

MCP, or Model Context Protocol, is an open protocol that lets AI assistants interact with external services through standardized JSON-RPC messages.

A few months ago, Anthropic released its Model Context Protocol (MCP), enabling AI assistants like Claude to interact with external services through standardized API calls.

In practical terms, it enables natural language interactions with structured data sources like Mailgun’s email analytics APIs.
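
To make the JSON-RPC part concrete, here is a minimal sketch of the kind of message an MCP client sends when an assistant decides to call a tool. The `tools/call` method and the `name`/`arguments` parameters come from the MCP specification. The tool name `get_domain_stats` and its arguments are hypothetical, chosen only for illustration, and are not necessarily what Mailgun's server exposes.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",        # JSON-RPC version marker, always "2.0"
        "id": request_id,        # lets the client match the response to this request
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    1, "get_domain_stats", {"domain": "example.com", "event": "delivered"}
)
print(request)
```

The assistant produces the tool name and arguments from your question; the client wraps them in this envelope and the server translates them into an API call.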

Check it out on GitHub at github.com/mailgun/mailgun-mcp-server.

Email analytics meets conversational AI

As developers, we're constantly looking for more efficient ways to access and analyze our data. Whether checking delivery rates, investigating bounces, or monitoring domain performance, these tasks traditionally require navigating dashboards or writing custom API scripts. MCP opens the door to something different: getting your data by simply asking for it.

We’re excited to announce Mailgun's open-source MCP server implementation, which bridges this gap by allowing developers to query our read-only APIs directly through Claude and other MCP-compatible assistants.

Why MCP?

Email is unique in being a truly distributed, self-governing system. It is built on open standards documented in the RFCs (Requests for Comments) published by the Internet Engineering Task Force (IETF). From SMTP to MIME to DMARC, these standards help emails reach their destinations. The ecosystem works because we all agreed on how things should talk to each other.

MCP is built on JSON-RPC carried over an application-layer transport, both of which are well-known, documented standards. Adopting it feels like a natural next step in the tradition of open protocols.
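
As a rough illustration of that layering, the sketch below frames JSON-RPC messages the way MCP's stdio transport does, one JSON object per line, and then decodes a response. The response payload is made up for the example; only the `jsonrpc`, `id`, `result`, and `error` fields are defined by JSON-RPC 2.0.

```python
import json


def encode_message(message: dict) -> bytes:
    """Frame one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the frame stays on one line.
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_messages(stream: bytes) -> list[dict]:
    """Split a byte stream into JSON-RPC messages, one per non-empty line."""
    lines = stream.decode("utf-8").split("\n")
    return [json.loads(line) for line in lines if line.strip()]


def unwrap_result(response: dict):
    """Return the result of a JSON-RPC response, or raise if it carries an error."""
    if "error" in response:
        error = response["error"]
        raise RuntimeError(f"JSON-RPC error {error['code']}: {error['message']}")
    return response["result"]


# Made-up response payload, for illustration only.
wire = encode_message({"jsonrpc": "2.0", "id": 1, "result": {"delivered": 120}})
for response in decode_messages(wire):
    print(unwrap_result(response))
```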

By implementing this standard for Mailgun, we're continuing our commitment to an environment where engineers can build systems without getting trapped in walled gardens. Just as you can send an email to anyone on the planet without thinking twice (please don’t send spam), now you can talk to your data through whatever assistant works best for you.

Image Source: https://xkcd.com/2116/

What can you do with Mailgun’s MCP server?

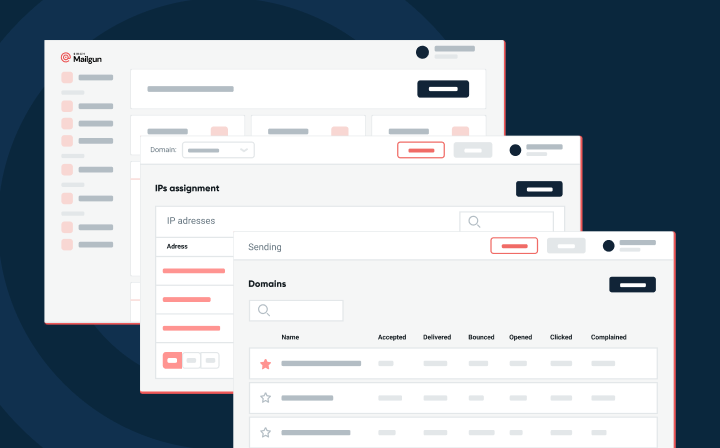

With our MCP server implementation, you can:

Query email performance metrics through natural language

Pull delivery statistics, bounce logs, and domain settings

Generate reports and visualizations of your email data

Send emails or validate email addresses

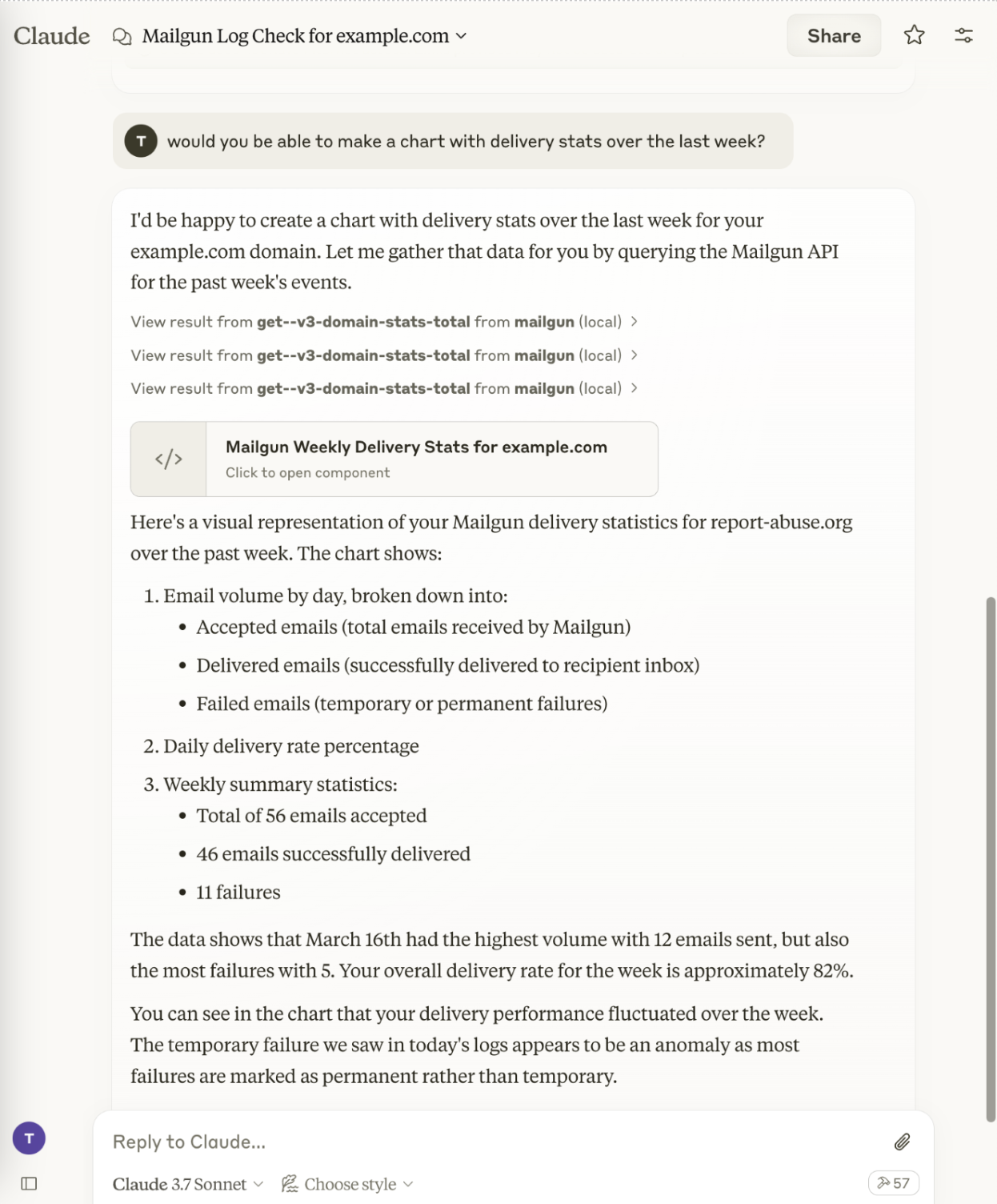

See it in action

Here's a glimpse of the kind of metrics you can pull using our MCP server and generative AI:

Why read-only

We've intentionally limited this implementation to read-only operations, with one exception: sending an email. This gives you the freedom to explore your data and configuration without risking accidental changes to your production environment. The design also makes it easy to enable endpoints that perform write operations if your use case calls for it.
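
One way to picture this design is an allow-list applied to the API's operations: expose everything that uses a read-only HTTP method, plus a short list of explicit exceptions. The sketch below is a hypothetical illustration of that idea, not the server's actual code; the operation paths and the `ALLOWED_WRITES` set are invented for the example.

```python
from dataclasses import dataclass

READ_ONLY_METHODS = {"GET", "HEAD"}

# Hypothetical explicit exceptions: write operations exposed on purpose.
ALLOWED_WRITES = {("POST", "/messages")}


@dataclass(frozen=True)
class Operation:
    method: str
    path: str


def is_exposed(op: Operation, allowed_writes=ALLOWED_WRITES) -> bool:
    """Expose read-only operations, plus any write that is explicitly allowed."""
    method = op.method.upper()
    if method in READ_ONLY_METHODS:
        return True
    return (method, op.path) in allowed_writes


# Hypothetical operations, for illustration only.
operations = [
    Operation("GET", "/stats"),
    Operation("POST", "/messages"),
    Operation("DELETE", "/domains"),
]
print([f"{op.method} {op.path}" for op in operations if is_exposed(op)])
```

With this shape, enabling another write endpoint means adding one entry to the exception set, so the default stays safe.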

Why conversational interfaces are the future

We believe infrastructure tools should adapt to how developers get work done. Conversational interfaces reduce the time spent translating questions into complex API queries by using natural language as a bridge.

Mailgun’s MCP implementation reduces cognitive overhead, enabling engineers to:

Get immediate insights without navigating dashboards

Request specific email metrics without writing custom code

Improve workflow efficiency with AI-driven queries

Get started today

Our MCP server implementation is available on GitHub at github.com/mailgun/mailgun-mcp-server. We've included setup steps in the README to help you get up and running quickly.
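
For orientation, MCP clients such as Claude Desktop typically register a local server through an `mcpServers` entry in a JSON config file. The sketch below generates such an entry, assuming the server is launched as a Node.js script. The script path, the `MAILGUN_API_KEY` variable name, and the placeholder values are assumptions here, so defer to the README for the exact settings.

```python
import json


def mcp_server_entry(name: str, script_path: str, api_key: str) -> dict:
    """Build an `mcpServers` config entry that launches a Node.js MCP server."""
    return {
        "mcpServers": {
            name: {
                "command": "node",
                "args": [script_path],
                # Assumed variable name; check the README for the real one.
                "env": {"MAILGUN_API_KEY": api_key},
            }
        }
    }


# Placeholder path and key, for illustration only.
config = mcp_server_entry("mailgun", "/path/to/server-entry.js", "YOUR-API-KEY")
print(json.dumps(config, indent=2))
```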

We're excited to see what’s next, and welcome contributions and feedback from the community.